AI has been witnessing a monumental growth in bridging the gap between the capabilities of humans and machines. Researchers and enthusiasts alike, forg on numerous aspects of the field to make amazing things happen. One of many such areas is the domain of Information processing system Vision.

The docket for this theater is to enable machines to vie w the world as humans do, perceive it in a similar manner and even use the knowledge for a masses of tasks much as Image & Video recognition, Image Analysis & Compartmentalization, Media Recreation, Recommendation Systems, Tongue Processing, etc. The advancements in Electronic computer Imagination with Abysmal Learning has been constructed and perfected with time, primarily over one detail algorithmic program — a Convolutional Nervous Meshing.

Introduction

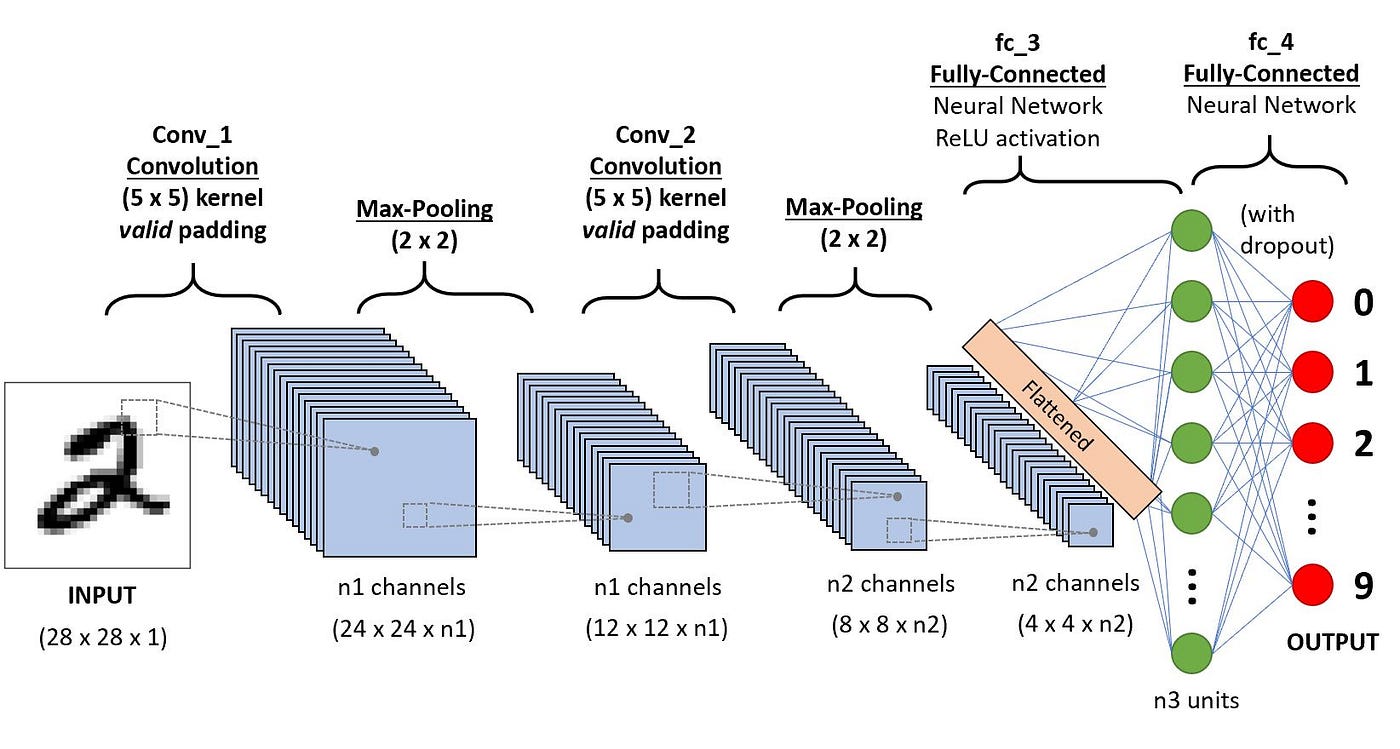

A Convolutional System Network (ConvNet/CNN) is a Deep Learning algorithm which can take in an stimulation image, assign importance (learnable weights and biases) to diverse aspects/objects in the image and be able to differentiate unmatchable from the some other. The pre-processing needed in a ConvNet is much lower as compared to past classification algorithms. While in primitive methods filters are hand-engineered, with enough training, ConvNets have the power to learn these filters/characteristics.

The architecture of a ConvNet is analogous to that of the connectivity pattern of Neurons in the Anthropomorphous Einstein and was divine aside the arrangement of the Optical Cortex. Individual neurons answer to stimuli only in a restricted region of the field of regard known arsenic the Receptive Field. A collection of so much William Claude Dukenfield overlap to back the entire visual area.

Wherefore ConvNets over Feed-Forward Somatic cell Nets?

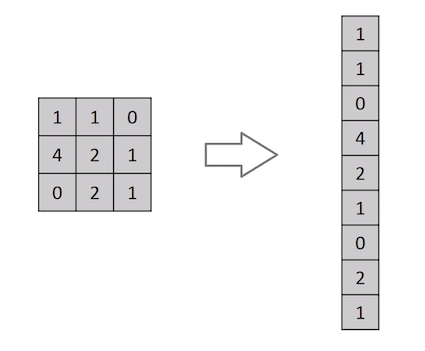

An image is zipp but a matrix of pixel values, right? So why not just flatten the project (e.g. 3x3 paradigm matrix into a 9x1 vector) and feed in IT to a Multi-Level Perceptron for classification purposes? Uh.. not really.

In cases of extremely basic binary images, the method might show an median preciseness score while performing anticipation of classes only would make little to no accuracy when it comes to complex images having pixel dependencies throughout.

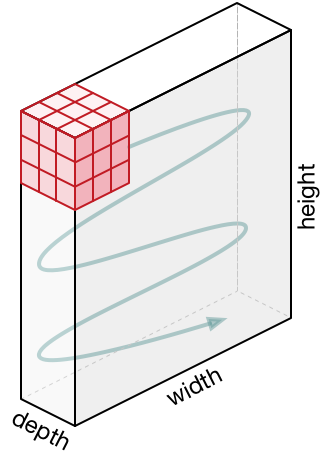

A ConvNet is able to successfully capture the Spatial and Temporal dependencies in an image through the application of at issue filters. The architecture performs a better fitting to the figure dataset owed to the reduction in the number of parameters involved and reusability of weights. In other words, the network nates be trained to understand the sophistication of the pictur healthier.

Stimulant Image

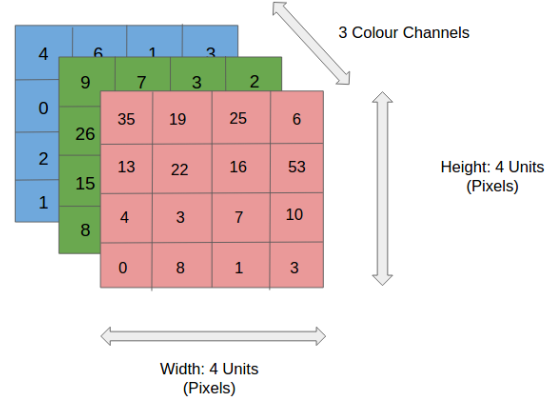

In the figure, we take over an RGB image which has been separated past its three color planes — Red, Green, and Blue. There are a number of so much color spaces in which images exist — Grayscale, RGB, HSV, CMYK, etc.

You can imagine how computationally intensifier things would get once the images reach dimensions, say 8K (7680×4320). The role of the ConvNet is to reduce the images into a form which is easier to process, without losing features which are critical for getting a good prediction. This is important when we are to innovation an architecture which is non only superb at learning features simply also is ascendible to big datasets.

Convolution Layer — The Kernel

Image Dimensions = 5 (Tallness) x 5 (Breadth) x 1 (Number of channels, eg. RGB)

In the preceding presentment, the green section resembles our 5x5x1 input image, I. The element complex in carrying out the convolution operation in the first part of a Convolutional Layer is called the Substance/Filter, K, represented in the vividness yellow. We induce elite K every bit a 3x3x1 matrix.

Kernel/Filter, K = 1 0 1

0 1 0

1 0 1

The Kernel shifts 9 multiplication because of Stride Duration = 1 (Non-Strided), every time performing a matrix multiplication military operation between K and the portion P of the image concluded which the kernel is hovering.

The filter moves to the opportune with a certain Stride Time value till it parses the complete width. Moving on, it hop pull down to the beginning (left) of the image with the same Footstep Value and repeats the process until the entire image is traversed.

In the case of images with triple channels (e.g. RGB), the Kernel has the same depth as that of the stimulus image. Matrix Multiplication is performed between Kn and In stack ([K1, I1]; [K2, I2]; [K3, I3]) and all the results are summed with the bias to give United States of America a squashed one-depth channel Involved Feature Output.

The objective of the Convolution Operation is to extract the mellow-level features so much as edges, from the stimulus project. ConvNets need not be limited to only one and only Convolutional Bed. Conventionally, the first ConvLayer is causative capturing the Low-Level features such as edges, color, gradient orientation, etc. With added layers, the architecture adapts to the High-Level features as well, giving United States of America a web which has the nutritious understanding of images in the dataset, similar to how we would.

At that place are 2 types of results to the operation — one in which the convolved feature is reduced in dimensionality as compared to the input signal, and the other in which the dimensionality is either enhanced or remains the same. This is done by applying Valid Cushioning in case of the former, or Same Padding in the case of the latter.

When we augment the 5x5x1 image into a 6x6x1 image and and so apply the 3x3x1 kernel over it, we find that the convolved matrix turns intent on be of dimensions 5x5x1. Hence the name — Same Padding.

But then, if we perform the same operation without padding, we are presented with a matrix which has dimensions of the Kernel (3x3x1) itself — Valid Padding.

The following repository houses many such GIFs which would help you incur a better understanding of how Cushioning and Stride Length work together to achieve results relevant to our needs.

Pooling Layer

Corresponding to the Convolutional Layer, the Pooling layer is responsible for reducing the spacial size of the Convolved Feature article. This is to reduction the computational power required to process the data through dimensionality reduction. Moreover, it is effective for extracting possessive features which are rotational and positional invariant, so maintaining the process of effectively training of the model.

On that point are two types of Pooling: Max Pooling and Average Pooling. Max Pooling returns the maximum valuate from the luck of the image covered by the Kernel. On the other hand, Average Pooling returns the average of all the values from the portion of the image mud-beplastered by the Kernel.

Georgia home boy Pooling also performs as a Haphazardness Suppressant. It discards the noisy activations altogether and also performs de-noising along with dimensionality reduction. On the separate hand, Average Pooling simply performs dimensionality reduction as a noise suppressing mechanism. Hence, we can tell that Max Pooling performs a lot healthier than Average Pooling.

The Convolutional Layer and the Pooling Layer, together soma the i-th layer of a Convolutional Neural Network. Depending on the complexities in the images, the number of much layers May be increased for capturing double-bass-levels inside information smooth further, but at the cost of more computational powerfulness.

After going finished the above process, we have with success enabled the worthy to understand the features. Riding on, we are going to drop the final exam output and give it to a regular System Network for classification purposes.

Classification — To the full Affined Bed (FC Layer)

Adding a Amply-Connected layer is a (usually) sixpenny way of learning not-linear combinations of the high-level features as represented by the yield of the convolutional layer. The Fully-Connected level is learning a possibly non-linear function in this space.

Now that we have converted our input image into a suitable var. for our Multi-Level Perceptron, we shall flatten the image into a column transmitter. The flattened output is fed to a feed-forward somatic cell network and backpropagation applied to every looping of grooming. Complete a series of epochs, the model is able-bodied to distinguish between dominating and certain low-level features in images and classify them using the Softmax Categorisation technique.

There are various architectures of CNNs available which have been describe in building algorithms which power and shall power AI every bit a whole in the foreseeable future. Some of them take been listed on a lower floor:

- LeNet

- AlexNet

- VGGNet

- GoogLeNet

- ResNet

- ZFNet

A Convolutional Forward and Back-projection Model for Fan-beam Geometry

Source: https://towardsdatascience.com/a-comprehensive-guide-to-convolutional-neural-networks-the-eli5-way-3bd2b1164a53